Implicit Interactive Fleet Learning (IIFL)

Mar 23, 2023

AutoLab at UC Berkeley. Contributed to the preliminary paper on Implicit Interactive Fleet Learning (IIFL) and was acknowledged in the final paper, published at the Conference on Robot Learning (CoRL) 2023. Special thanks to Anrui Gu, Gaurav Datta, and Ryan Hoque for inviting me into the project and for the work we did together!

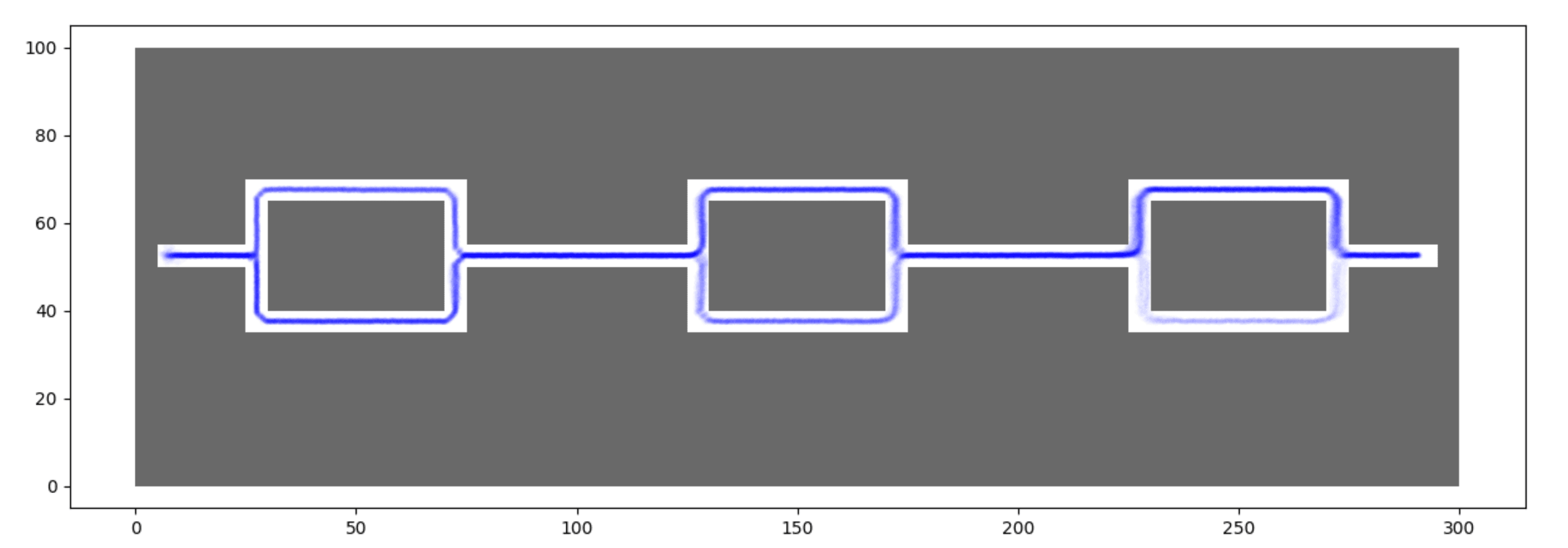

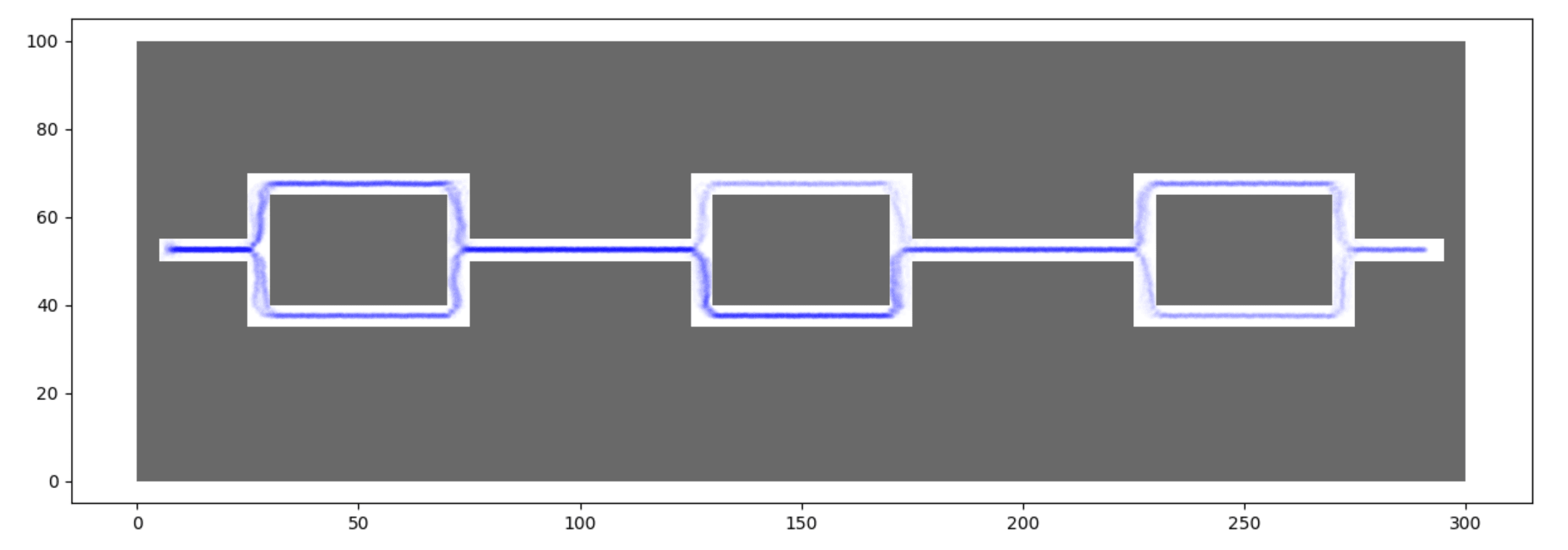

The goal of the project was to reduce the effect of the distributional shift induced by imitation learning in robotic navigation tasks. Behavioral Cloning (BC) trains a policy only on the states visited in the demonstrations, so small execution errors can lead the robot into states the demonstrations never covered, and BC does not handle these edge cases gracefully. Interactive Fleet Learning (IFL) addresses this issue by collecting human feedback in the loop, effectively reducing the distributional shift. However, it does not consider that demonstrations may differ from one human to another, or that feedback may be noisy. Implicit Behavioral Cloning (IBC) has been observed to be a promising alternative for capturing such multi-modal data through energy-based models. As a result, Implicit Interactive Fleet Learning (IIFL) emerged as a learning strategy that combines the benefits of IFL and IBC to learn a policy that is robust to distributional shift and to noisy human feedback.

At the beginning of the project, we compared BC and IBC across 100 rollouts and observed that BC performed better than IBC, which was counterintuitive at the time: BC and IBC reached success rates of 90% and 32%, respectively, following trajectories like the ones shown in the plots below.

BC rollouts over navigation map (100 samples).

IBC rollouts over navigation map (100 samples).

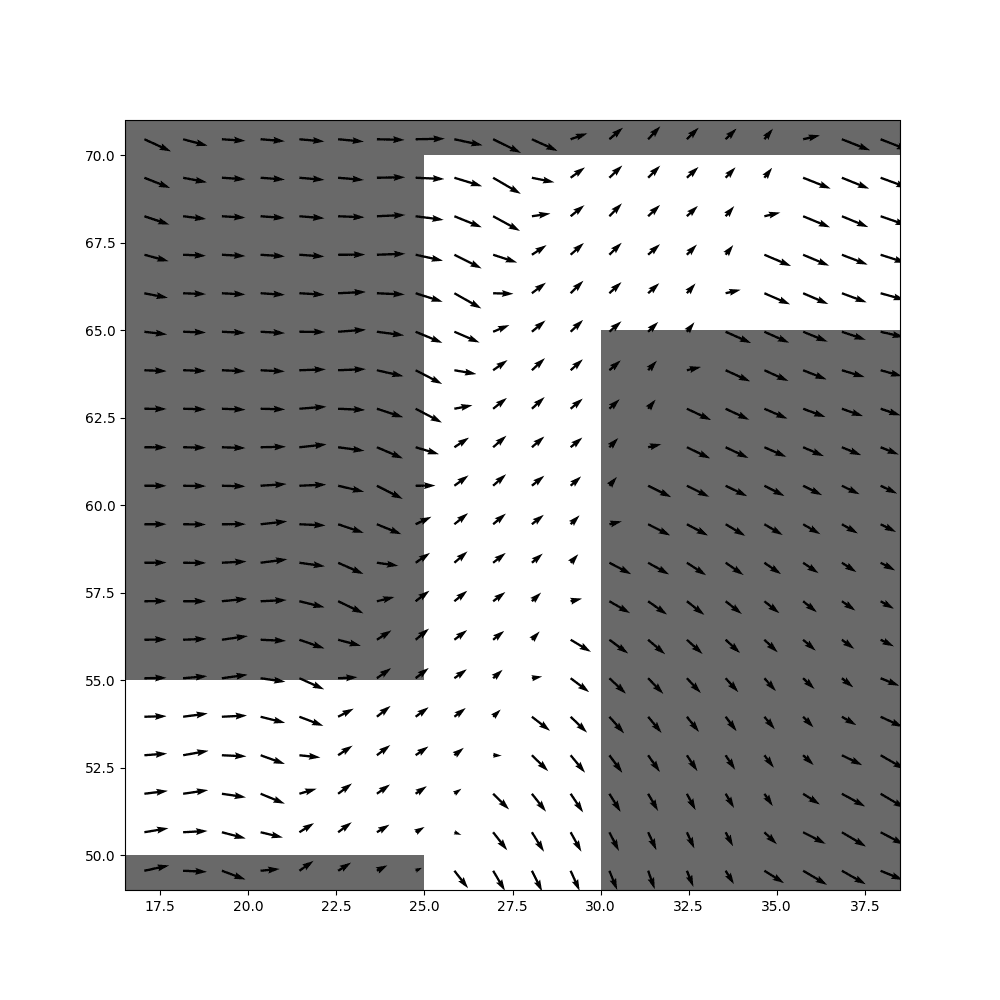

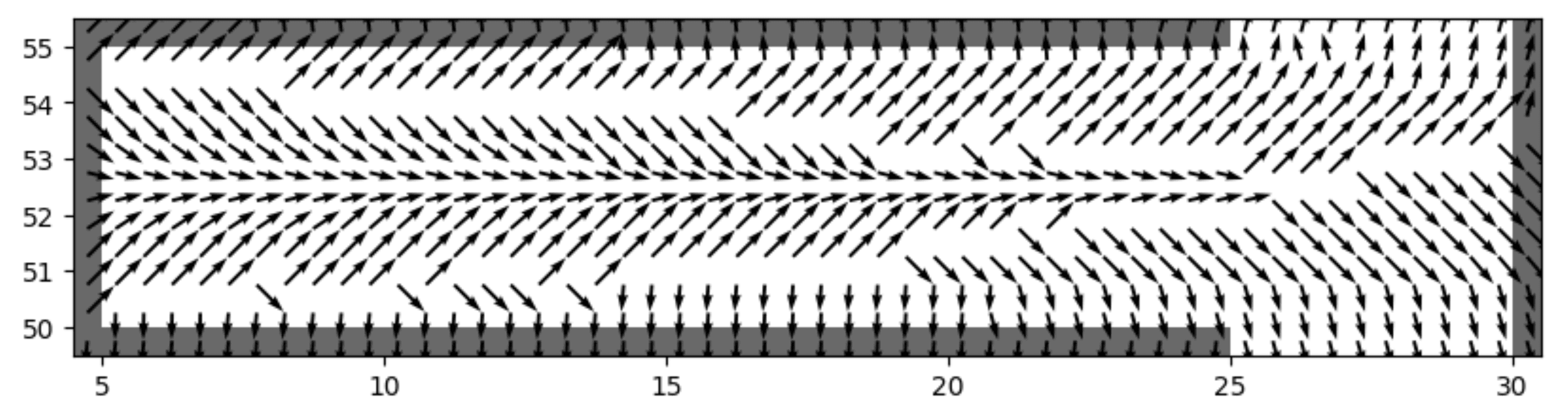
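Why was this result counterintuitive? The sketch below is a toy 1-D illustration, not the project's code, built on hypothetical demonstration data: when demonstrators disagree (half steer left, half steer right), explicit BC trained with a mean-squared-error loss predicts the average of the two modes, while an implicit policy returns a low-energy action close to one of them. The kernel-density `energy` function is an assumed stand-in for IBC's learned energy network, and `implicit_action` is a simplified version of the sample-and-resample, derivative-free optimizer described in the IBC paper.

```python
import math
import random

# Hypothetical toy data (not from the project): at the same observation, half of
# the demonstrators steer left (-1.0) and half steer right (+1.0).
demo_actions = [-1.0] * 50 + [1.0] * 50


def explicit_bc_action(actions):
    """Explicit BC with an MSE loss: the best constant prediction is the mean label."""
    return sum(actions) / len(actions)


def energy(action, actions, bandwidth=0.1):
    """Toy energy E(a): negative log of a Gaussian kernel density over the demos.

    Stands in for a learned network E(observation, action); low energy means
    the action looks like one of the demonstrated actions.
    """
    density = sum(math.exp(-((action - a) ** 2) / (2 * bandwidth ** 2)) for a in actions)
    return -math.log(density + 1e-12)


def implicit_action(actions, n_samples=256, n_iters=3, low=-2.0, high=2.0, seed=0):
    """Approximate argmin_a E(a) with a sample-and-resample optimizer."""
    rng = random.Random(seed)
    candidates = [rng.uniform(low, high) for _ in range(n_samples)]
    sigma = 0.3
    for _ in range(n_iters):
        energies = [energy(a, actions) for a in candidates]
        e_min = min(energies)
        # Softmax over negative energies: low-energy samples are resampled more often.
        weights = [math.exp(-(e - e_min)) for e in energies]
        parents = rng.choices(candidates, weights=weights, k=n_samples)
        # Perturb the resampled actions, clip them to the bounds, and shrink the noise.
        candidates = [min(high, max(low, p + rng.gauss(0.0, sigma))) for p in parents]
        sigma *= 0.5
    return min(candidates, key=lambda a: energy(a, actions))


bc = explicit_bc_action(demo_actions)
ibc = implicit_action(demo_actions)
print(f"explicit BC action: {bc:+.2f}")   # the average of the two modes
print(f"implicit action:    {ibc:+.2f}")  # close to one of the two modes
```

In a navigation map, the averaged action can steer straight into an obstacle that every demonstrator avoided, which is why an implicit policy was expected to do better here.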

Action-selection vector field for DART over navigation map.

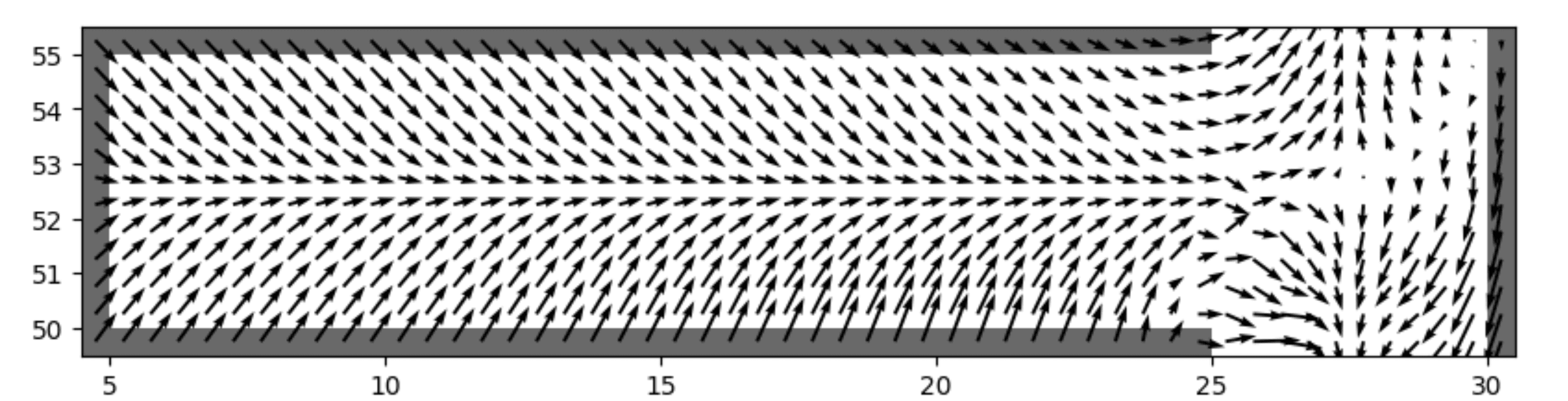

The vector field shows the action the robot would take at each point in the navigation map: the length of each vector indicates the magnitude of the action, and its orientation indicates the direction of the action. With this tool in hand, we took a closer look at the performance of BC and IBC, as shown in the following plots.
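A minimal sketch of how such a field can be computed, assuming a policy is a Python function that maps an (x, y) position to a 2-D action. The `centering_policy` below is a hypothetical stand-in for a trained policy, and the corridor geometry and grid bounds are arbitrary choices for illustration.

```python
import math


def centering_policy(pos, gain=0.5):
    """Hypothetical policy for a horizontal corridor centered on y = 0:
    move forward at unit speed while steering back toward the center line."""
    x, y = pos
    return (1.0, -gain * y)


def action_field(policy, x_range, y_range, resolution):
    """Evaluate `policy` on a resolution x resolution grid of positions.

    Returns a list of (x, y, dx, dy, magnitude) tuples, one per grid point.
    """
    (x0, x1), (y0, y1) = x_range, y_range
    xs = [x0 + i * (x1 - x0) / (resolution - 1) for i in range(resolution)]
    ys = [y0 + j * (y1 - y0) / (resolution - 1) for j in range(resolution)]
    field = []
    for y in ys:
        for x in xs:
            dx, dy = policy((x, y))
            field.append((x, y, dx, dy, math.hypot(dx, dy)))
    return field


field = action_field(centering_policy, x_range=(0.0, 4.0), y_range=(-1.0, 1.0), resolution=5)
xs, ys, us, vs, mags = zip(*field)
```

To draw the field, the unpacked sequences can be passed to matplotlib, e.g. `plt.quiver(xs, ys, us, vs)`, where arrow length encodes the action magnitude and arrow orientation its direction.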

Action-selection vector field for BC over navigation map.

Action-selection vector field for IBC over navigation map.

The vector field for BC shows a clear pattern that keeps the robot in the center of the path, away from the walls. In contrast, the vector field for IBC shows only a narrow path through the center of the map, flanked by diverging vectors that push the robot toward the walls as soon as any noise is introduced. This analysis was the first step in identifying the issues with IBC in the navigation task, which were later overcome both in the same setup and in Isaac Gym simulations.
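One way to quantify this difference is to estimate the divergence of the action field and to roll out a policy from a slightly perturbed start. This is a hypothetical diagnostic, not the analysis used in the project (which inspected the plotted fields), and `centering_policy` and `diverging_policy` are caricatures of the BC and IBC patterns in a corridor centered on y = 0. Negative divergence means nearby vectors converge, so small offsets shrink; positive divergence means they spread apart, so small offsets grow.

```python
def centering_policy(pos, gain=0.5):
    """Hypothetical BC-like field: steer back toward the corridor center y = 0."""
    x, y = pos
    return (1.0, -gain * y)


def diverging_policy(pos, gain=0.5):
    """Hypothetical IBC-like field near the center: small offsets are amplified."""
    x, y = pos
    return (1.0, gain * y)


def divergence(policy, pos, h=1e-3):
    """Central-difference estimate of d(a_x)/dx + d(a_y)/dy at `pos`."""
    x, y = pos
    ax_plus, _ = policy((x + h, y))
    ax_minus, _ = policy((x - h, y))
    _, ay_plus = policy((x, y + h))
    _, ay_minus = policy((x, y - h))
    return (ax_plus - ax_minus) / (2 * h) + (ay_plus - ay_minus) / (2 * h)


def perturbed_rollout(policy, y0=0.05, steps=100, dt=0.1):
    """Start slightly off-center (a one-off perturbation) and return the final |y|."""
    x, y = 0.0, y0
    for _ in range(steps):
        dx, dy = policy((x, y))
        x, y = x + dt * dx, y + dt * dy
    return abs(y)


for name, policy in [("centering", centering_policy), ("diverging", diverging_policy)]:
    print(name, divergence(policy, (1.0, 0.2)), perturbed_rollout(policy))
```

With `dt = 0.1` and `gain = 0.5`, each step multiplies the offset by 0.95 for the centering field and by 1.05 for the diverging one, so an initial offset of 0.05 shrinks toward zero in the first case and grows by a factor of about 130 in the second.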

In this work, my responsibilities primarily revolved around research engineering: implementing algorithms (DART), coding custom environments (navigation maps and Isaac Gym simulations), automating the parallel and distributed execution of training/evaluation scripts across GPUs and servers, and performing hyperparameter search sweeps with Weights and Biases.
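DART (Disturbances for Augmenting Robot Trajectories) injects noise into the supervisor's actions while demonstrations are collected, so the rollouts visit states slightly off the nominal path, while every recorded label is still the supervisor's intended, noise-free action. The sketch below is not the project's implementation: it assumes a 1-D corridor, a hypothetical `supervisor` that steers back toward the center, and a fixed Gaussian noise level. The full DART algorithm additionally tunes the noise to approximate the learner's error, which is omitted here.

```python
import random


def supervisor(y, gain=0.5):
    """Hypothetical expert: steer back toward the corridor center y = 0."""
    return -gain * y


def dart_rollout(expert, y0, horizon, noise_std, dt=0.1, seed=0):
    """Collect one demonstration with DART-style noise injection.

    Returns a list of (state, label) pairs, where each label is the expert's
    clean action at the visited state, not the noisy action that was executed.
    """
    rng = random.Random(seed)
    y = y0
    dataset = []
    for _ in range(horizon):
        label = expert(y)                             # action the expert intends
        executed = label + rng.gauss(0.0, noise_std)  # disturbed action actually applied
        dataset.append((y, label))
        y = y + dt * executed                         # noisy step drifts off the nominal path
    return dataset


clean = dart_rollout(supervisor, y0=0.0, horizon=50, noise_std=0.0)
noisy = dart_rollout(supervisor, y0=0.0, horizon=50, noise_std=0.5)
print("states visited without noise:", {y for y, _ in clean})
print("max |y| with noise:", max(abs(y) for y, _ in noisy))
```

Recording the clean label rather than the executed action is the key design choice: the learner sees off-nominal states paired with corrective actions, instead of imitating the injected noise.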